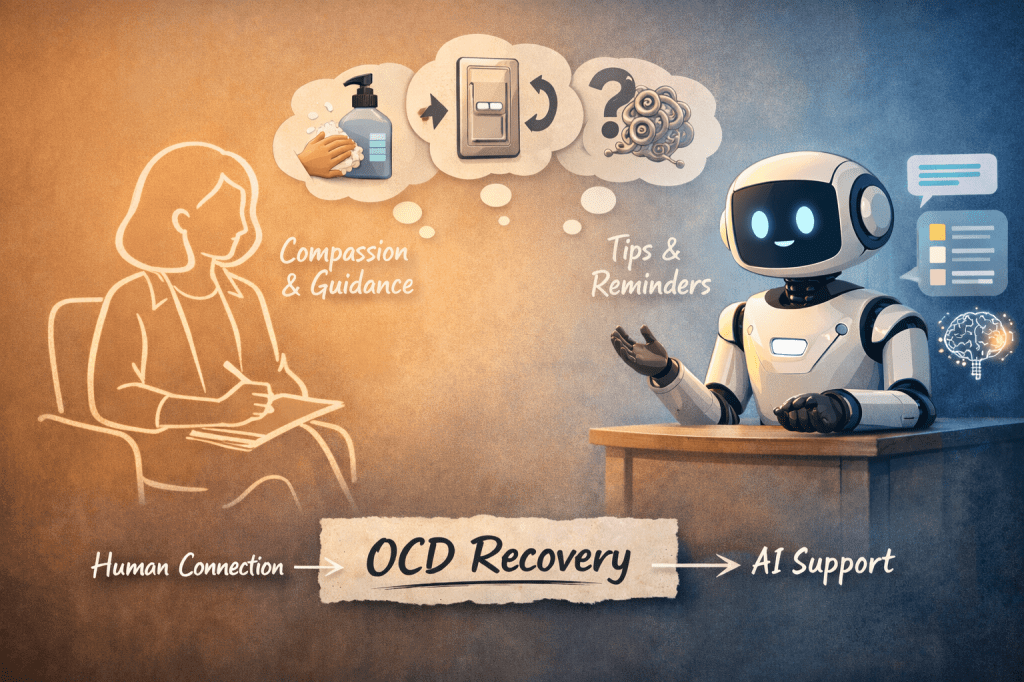

AI is increasingly being talked about in mental health spaces, and people with OCD are understandably curious. If an app or chatbot can explain intrusive thoughts, talk through exposure exercises, or offer reassurance in moments of anxiety, it can start to feel like therapy itself is becoming optional.

As someone who works with OCD using CBT and ERP, I think the truth is more nuanced, and far more hopeful, than the idea of replacement.

AI can help people with OCD.

It just can’t do the work that actually changes OCD.

One of the genuinely helpful things AI can do is explain OCD clearly. Many people arrive in therapy after years of confusion and shame, convinced that their thoughts mean something terrible about who they are. Having access to explanations about intrusive thoughts, the OCD cycle, and why reassurance keeps the problem going can be hugely relieving. It can normalise experiences that people have often been too frightened to say out loud.

AI can also be useful between therapy sessions. OCD treatment doesn’t happen in the therapy room alone — it happens in everyday moments when someone resists a compulsion, sits with anxiety, or chooses uncertainty on purpose. Used carefully, AI tools can help people reflect on exposures they’ve done, notice patterns, or stay anchored to the principles of ERP when OCD is loud and convincing.

For people waiting for specialist treatment, this kind of support can feel like a lifeline. It can reduce isolation and help someone feel less alone with their thoughts while they’re taking steps toward proper care.

But this is also where the limits start to become clear.

OCD is exceptionally good at disguising itself as common sense, self-care, or even recovery. A key part of my job as a therapist is spotting when something that looks helpful is actually a compulsion in disguise. Reassurance-seeking, checking, mental reviewing, subtle avoidance — these are often invisible to the person doing them. AI, no matter how sophisticated, struggles here. It can accidentally reassure, validate certainty-seeking, or engage in endless “what if” conversations that keep OCD alive rather than weaken it.

ERP is not just about facing fears. It’s about doing so in a way that reduces compulsions rather than reinforcing them. That requires careful formulation, pacing, and adjustment. Sometimes the bravest thing in OCD therapy is not to answer a question, not to soothe, and not to rescue someone from discomfort. That kind of therapeutic restraint is deeply human and deeply relational.

There is also something important about being with another person who can tolerate your distress without trying to make it go away. OCD therapy often involves sitting in uncertainty together — allowing anxiety, doubt, and fear to exist without rushing to fix them. A therapist can hold that space, notice when things shift, and help repair moments when therapy itself becomes difficult. AI cannot do that in the same way, because it does not carry responsibility, judgment, or emotional presence.

Perhaps most importantly, effective OCD treatment is personal. Two people might both have contamination fears, but what those fears mean — responsibility, morality, danger, disgust — can be entirely different. Therapy works because it responds to the individual, not the label. AI responds to patterns in language, not the lived experience underneath them.

When AI is framed as a replacement for OCD therapy, it risks being harmful. People can end up stuck in cycles of reassurance, overly intense self-directed exposure, or increased shame when symptoms don’t improve. OCD is not a logic problem that disappears with the right explanation. It’s a learning problem that changes through repeated experience, support, and courage over time.

A more helpful way to think about AI is as an assistant, not a therapist. It can support learning, reinforce principles, and help people stay engaged between sessions. It can widen access and reduce barriers. But it should never pretend to do the work that belongs in a therapeutic relationship.

CBT for OCD works because a trained therapist helps someone face uncertainty, resist compulsions, and build trust in their own capacity to cope — again and again, over time. That process depends on formulation, judgment, and human presence.

AI can help with OCD.

It just can’t replace the human work of recovery.